Inter-process communication (IPC) doesn’t typically give programmers warm fuzzies. “Wait, I just want my programs to talk to each other, why is this hard???” is a sentiment I very much understand. Recently, while working on Mixologist, I reached a point where I needed a CLI, a GUI, and a daemon to all run and communicate with one another, sending info back and forth in a wonderfully concurrent, unordered fashion. Those two words used together usually send shivers down the spine of any experienced programmer but, with a little legwork, the problem is solvable.

Humble Beginnings

Let’s go back to the beginning: I started my work on Mixologist’s IPC needing only a daemon and a CLI. This let me dip my toes into the waters of IPC without having to go straight into the deep end. After some cursory research, I had a few options to pursue:

- Shared Memory

- Pipes

- Sockets

Initially, shared memory looked fairly promising, since it would give every process full access to the shared state of the others. I ultimately decided against it because of the synchronization headache and the difficulty of writing custom clients to interact with my program. Pipes were also a no-go, unfortunately: a pipe carries data in only one direction, and I expected to need bidirectional communication. That left me with sockets, probably one of the most common tools for IPC thanks to their flexibility and relative ease of use.

So how do you make a socket?

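Here is a minimal sketch of the same call in C against the POSIX socket API (the post itself uses Odin). The helper name `make_unix_stream_socket` is hypothetical and does not come from Mixologist:

```c
#include <stdio.h>
#include <sys/socket.h>

/* Create a Unix domain stream socket, the C spelling of the post's
   .UNIX / .STREAM pair. Returns the fd, or -1 with errno set. */
int make_unix_stream_socket(void) {
    int fd = socket(AF_UNIX, SOCK_STREAM, 0);
    if (fd < 0) perror("socket");
    return fd;
}
```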

Well, that isn’t too bad.

Unfortunately, there is some extra work to do depending on whether you’re the server or the client. If you’re the server, the setup is as follows:

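Here is a C sketch of the same server-side steps. It assumes a filesystem path such as `/tmp/mixd.sock`, which is hypothetical because the post doesn't give its socket name. The `unlink()` before `bind()` is an extra step not described above. It assumes any existing file at that path is a leftover from a previous run.

```c
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <unistd.h>

/* Create a listening Unix domain socket at `path`.
   Returns the listening fd, or -1 on error. */
int server_listen(const char *path) {
    struct sockaddr_un addr;
    memset(&addr, 0, sizeof addr);
    addr.sun_family = AF_UNIX;
    /* sun_path is a fixed-size array; refuse paths that do not fit. */
    if (strlen(path) >= sizeof addr.sun_path) return -1;
    strcpy(addr.sun_path, path);

    int fd = socket(AF_UNIX, SOCK_STREAM, 0);
    if (fd < 0) return -1;

    /* Assumes any file already at `path` is stale; otherwise bind() fails
       with EADDRINUSE. */
    unlink(path);

    /* bind() gives the socket its name and creates the filesystem entry. */
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0) {
        perror("bind");
        close(fd);
        return -1;
    }
    /* listen() lets accept() take connections; 16 is an arbitrary backlog. */
    if (listen(fd, 16) < 0) {
        perror("listen");
        close(fd);
        return -1;
    }
    return fd;
}
```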

So what did that do? We start by setting up the socket and its address, which just gives it a name on the filesystem to use. We then bind the socket, which is what actually sets it up on the system (for a Unix domain socket, this is the step that creates the socket file at that path). Finally, we listen on the socket, which allows us to accept incoming connections.

The client side is simpler:

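Here is the client side in the same C sketch form. `client_connect` is a hypothetical helper that returns -1 instead of aborting, so the caller handles the error, as the post describes:

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <unistd.h>

/* Connect to a Unix domain stream socket at `path`.
   Returns the connected fd, or -1 (e.g. ENOENT or ECONNREFUSED when the
   daemon is not running); reporting that is left to the caller. */
int client_connect(const char *path) {
    struct sockaddr_un addr;
    memset(&addr, 0, sizeof addr);
    addr.sun_family = AF_UNIX;
    if (strlen(path) >= sizeof addr.sun_path) return -1;
    strcpy(addr.sun_path, path);

    int fd = socket(AF_UNIX, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    /* Same setup as the server, but connect() replaces bind() + listen(). */
    if (connect(fd, (struct sockaddr *)&addr, sizeof addr) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```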

The setup is the same as for the server, but instead of bind and listen, we just connect. This can of course fail (the socket may not exist, for example if the daemon isn’t running), but that is something we can handle on the caller side.

Some of you may have noticed that I’m using .UNIX and .STREAM for the socket type and are asking “what’s that all about?” We are using Unix domain sockets here instead of INET sockets since they are designed for local communication. We are also using stream sockets instead of datagram sockets because knowing about the connection itself is important.

Datagram sockets can be extremely useful when you rely on clearly defined message boundaries and don’t require ordering. However, they don’t have the concept of a “connection”, which can make certain things harder to write.

Unidirectional Communication

Let’s look at the interface for the CLI I built:

Flags:

-add-program:<string>, multiple | name of program to add to aux

-remove-program:<string>, multiple | name of program to remove from aux

-set-volume:<f32> | volume to assign nodes

-shift-volume:<f32> | volume to increment nodes

So, as it stands, the CLI only needs to send things to the daemon. That means we only ever need to call send on the client to get the data over to the server, which is relatively simple:

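Since the CLI only sends, the client side comes down to `send()`. One detail is worth handling even in a sketch. On a stream socket, `send()` may accept fewer bytes than requested, so the hypothetical `send_all` helper below loops until everything is written. The message encoding itself is left out.

```c
#include <errno.h>
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Send all `len` bytes of `buf` on a connected stream socket.
   Returns 0 on success, -1 on error. */
int send_all(int fd, const void *buf, size_t len) {
    const char *p = buf;
    while (len > 0) {
        /* MSG_NOSIGNAL: report a closed peer as EPIPE instead of SIGPIPE. */
        ssize_t n = send(fd, p, len, MSG_NOSIGNAL);
        if (n < 0) {
            if (errno == EINTR) continue; /* interrupted by a signal: retry */
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    return 0;
}
```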

On the server side, we do the following:

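Here is a C sketch of that naive server side, using a hypothetical `accept_and_read` helper. It accepts one connection, reads what the client sent, and closes it. This is the version that stalls, because every call on a blocking socket waits.

```c
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Naive first version of the daemon side. On a blocking socket, accept()
   waits for a connection and recv() waits for data, and the whole event
   loop waits with them. */
ssize_t accept_and_read(int listen_fd, char *buf, size_t cap) {
    int client = accept(listen_fd, NULL, NULL);
    if (client < 0) return -1;
    ssize_t n = recv(client, buf, cap, 0); /* bytes read; 0 = client closed */
    close(client);
    return n;
}
```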

This is pretty simple, but the minute we try to run the program, we’ll notice that the event loop just… stalls. That happens because the socket is blocking: whenever we call recv on the socket, program execution halts until the socket receives some data. So how do we solve this? Fortunately, when you create a socket, you can add a single flag, changing the instantiation to this:

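In C on Linux, the flag is `SOCK_NONBLOCK`, OR-ed into the socket type. The sketch also shows the portable `fcntl()` route. That route matters for sockets returned by `accept()`, which on Linux do not inherit the listener's non-blocking flag. Both helper names are hypothetical.

```c
#include <fcntl.h>
#include <sys/socket.h>

/* SOCK_NONBLOCK is OR-ed into socket()'s type argument (Linux, some BSDs). */
int make_nonblocking_socket(void) {
    return socket(AF_UNIX, SOCK_STREAM | SOCK_NONBLOCK, 0);
}

/* Portable alternative for an fd you already have, e.g. one from accept(). */
int set_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}
```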

Now when we call recv, we can check recv_err to see if it is either EWOULDBLOCK or EAGAIN and, if so, skip any subsequent code that depends on the result of a finished transmission.

Check both for portability reasons: POSIX allows EAGAIN and EWOULDBLOCK to have different values, so you can’t assume they are the same (on Linux, they are).
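Here is a sketch of that check in C. The hypothetical `try_recv` sorts the outcome of a non-blocking `recv()` into four cases: data, nothing yet, peer closed, or a real error.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>

typedef enum { RECV_DATA, RECV_WOULD_BLOCK, RECV_CLOSED, RECV_ERROR } RecvStatus;

/* Try to read without stalling the event loop.
   On RECV_DATA, *out_len holds the number of bytes read. */
RecvStatus try_recv(int fd, void *buf, size_t cap, size_t *out_len) {
    ssize_t n = recv(fd, buf, cap, 0);
    if (n > 0) {
        *out_len = (size_t)n;
        return RECV_DATA;
    }
    if (n == 0) return RECV_CLOSED; /* peer closed its end */
    /* Nothing to read yet: the caller skips work that needs a message. */
    if (errno == EAGAIN || errno == EWOULDBLOCK) return RECV_WOULD_BLOCK;
    return RECV_ERROR;
}
```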

With that change in place, we can now run any of our commands (e.g. mixcli -set-volume:0) and hear (this is an audio program after all) the results in real time. Unfortunately, although we do have data going to the daemon, we don’t have anything coming back. That’s a bit of an issue if we want to add something like -get-volume, which could be useful for scripts and other tools.

Bidirectional Communication

So how might we add that? Well, on the client we can do this:

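Here is a C sketch of the CLI side. It uses a blocking socket, so `recv()` simply waits for the daemon's answer. `request_reply` is hypothetical. It assumes each request and reply fits in a single `send`/`recv`. That is usually fine for tiny messages, but stream sockets don't guarantee it.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Send a request on a *blocking* socket and wait for the reply.
   Returns the reply length, 0 if the daemon hung up, or -1 on error. */
ssize_t request_reply(int fd, const void *req, size_t req_len,
                      void *reply, size_t reply_cap) {
    if (send(fd, req, req_len, MSG_NOSIGNAL) != (ssize_t)req_len) return -1;
    ssize_t n;
    do {
        n = recv(fd, reply, reply_cap, 0); /* parks the CLI until the daemon answers */
    } while (n < 0 && errno == EINTR);
    return n;
}
```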

On something like the CLI, we actually don’t want the socket to be non-blocking. Instead, we can use a normal blocking socket and just let recv block until we get a response from the daemon.

On the daemon side, we can do some simple message processing and then craft a response to send back to the CLI:

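Here is a sketch of the daemon side in C. The `Msg` struct and `MIX_*` kinds are hypothetical stand-ins. The post encodes its messages with CBOR, while this sketch sends a raw struct. That only works because both ends run on the same machine and share one definition.

```c
#include <sys/socket.h>
#include <sys/types.h>

/* Hypothetical message layout, for illustration only. */
typedef enum { MIX_GET_VOLUME, MIX_SET_VOLUME, MIX_SHIFT_VOLUME, MIX_VOLUME } MixKind;
typedef struct { MixKind kind; float volume; } Msg;

/* Apply one request to the daemon's state; answer queries on the same fd.
   Returns 0 on success, -1 if the reply could not be sent. */
int handle_message(int client_fd, const Msg *in, float *volume) {
    switch (in->kind) {
    case MIX_SET_VOLUME:   *volume = in->volume;  return 0;
    case MIX_SHIFT_VOLUME: *volume += in->volume; return 0;
    case MIX_GET_VOLUME: {
        Msg out = { .kind = MIX_VOLUME, .volume = *volume };
        ssize_t n = send(client_fd, &out, sizeof out, MSG_NOSIGNAL);
        return n == (ssize_t)sizeof out ? 0 : -1;
    }
    default:
        return 0; /* ignore kinds the daemon does not handle */
    }
}
```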

Now, when we run our program:

mixcli -get-volume

0.2

we get the expected response.

The N+1 Problem

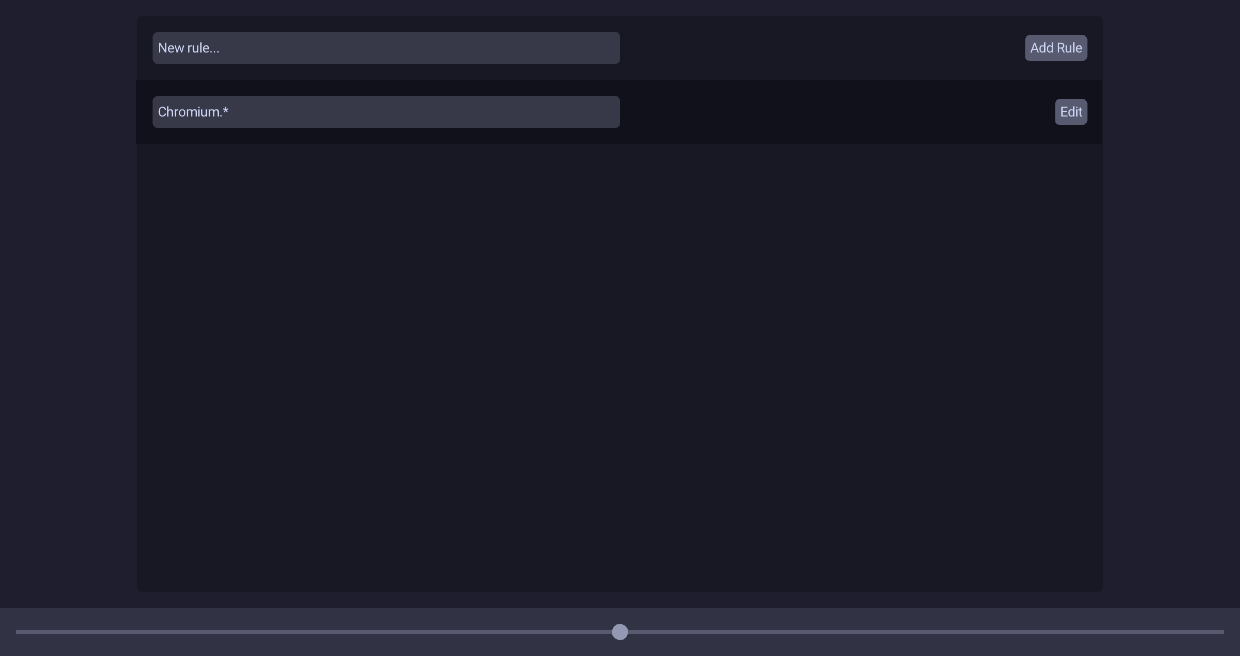

With a CLI working, it’s time to add a GUI. Let’s start by roughing out the GUI with no actual backend plumbing:

So, what data does the program need? It needs the list of program rules that populates the text box list, and the volume assigned to mixd itself. For the time being, however, this will be handled by inotify watching the config. We also want changes to the volume slider (bottom), though. These changes should of course be sent to mixd, but we also want changes made to the volume by other programs (e.g. mixcli or another GUI instance) to show up here. So we probably need a way to “subscribe” to changes to the volume, which means we will need:

- Managing of multiple simultaneous connections

- Persistent connections

- A way to handle disconnected clients

- Tracking of “subscribers”

In a previous post, I mentioned the basic message-passing format I was using to send data from the CLI to the daemon. We will make a couple of changes here, though:


Note: we use CBOR to encode the messages because it makes them trivial to serialize, keeps them relatively small, and is quite resilient to format changes.

So, these Get and Subscribe messages allow us, as clients, to make a request to the server telling it that we want all future updates to the volume. On the client, this is relatively simple:

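Here is a C sketch of the GUI client. It sends Get and Subscribe once. Then, each frame, it drains whatever updates have arrived on a non-blocking socket, so the UI never waits on the daemon. The message struct is again a hypothetical raw-struct stand-in for the post's CBOR messages. Sending Get followed by Subscribe is an assumption about how the two are used together.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Hypothetical wire messages, for illustration only. */
typedef enum { MIX_GET, MIX_SUBSCRIBE, MIX_VOLUME } MixKind;
typedef struct { MixKind kind; float volume; } MixMsg;

/* Ask for the current volume and for all future volume updates. */
int subscribe_volume(int fd) {
    MixMsg msgs[2] = { { .kind = MIX_GET }, { .kind = MIX_SUBSCRIBE } };
    ssize_t n = send(fd, msgs, sizeof msgs, MSG_NOSIGNAL);
    return n == (ssize_t)sizeof msgs ? 0 : -1;
}

/* Call once per GUI frame on a non-blocking socket. Returns 1 if *volume
   changed, 0 if nothing arrived, -1 if the daemon went away or on error. */
int poll_volume_updates(int fd, float *volume) {
    int changed = 0;
    for (;;) {
        MixMsg msg;
        ssize_t n = recv(fd, &msg, sizeof msg, 0);
        if (n == (ssize_t)sizeof msg) {
            if (msg.kind == MIX_VOLUME) {
                *volume = msg.volume;
                changed = 1;
            }
            continue;
        }
        if (n < 0 && errno == EINTR) continue;
        if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK)) return changed;
        return -1; /* 0 = daemon closed; also errors and partial messages */
    }
}
```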

On the server however, we have an issue now: our current implementation only allows for a single connected client. That means if we want to keep the connection open to send data back to the client, we’ll need to have a way of managing multiple sockets at once.

Enter poll()

Fortunately, there are tools for dealing with many sockets at once; the two I considered in this case were:

- poll

- epoll

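Although epoll was attractive because of its better asymptotic performance (each wait costs time proportional to the number of ready sockets rather than the number being watched), I decided against it for two reasons: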

- More complex to set up

- Low number of sockets

So, on the server, we can start creating an IPC system. Here’s the state for it:


We then initialize it like so:

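Here is a C approximation of the server state and its initialization. C has no `small_array`, so fixed-capacity arrays plus a length field play that role. `IpcServer`, `ipc_server_init`, and the capacity of 16 are all hypothetical. Slot 0 of the `pollfd` array holds the listening socket, so one `poll()` call covers both new connections and client traffic.

```c
#include <poll.h>
#include <stddef.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <unistd.h>

#define MAX_CLIENTS 16 /* arbitrary cap: the daemon only expects a few clients */

/* Fixed-capacity storage lives inside the struct, so no heap is needed. */
typedef struct {
    struct pollfd fds[MAX_CLIENTS + 1]; /* fds[0] is the listening socket */
    size_t nfds;
    int to_remove[MAX_CLIENTS];         /* clients to clean up after a cycle */
    size_t nremove;
} IpcServer;

/* Bind a non-blocking listening socket at `path` and register it in slot 0. */
int ipc_server_init(IpcServer *s, const char *path) {
    memset(s, 0, sizeof *s);
    struct sockaddr_un addr = { .sun_family = AF_UNIX };
    if (strlen(path) >= sizeof addr.sun_path) return -1;
    strcpy(addr.sun_path, path);

    int fd = socket(AF_UNIX, SOCK_STREAM | SOCK_NONBLOCK, 0);
    if (fd < 0) return -1;
    unlink(path); /* assumes a leftover socket file is stale */
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 || listen(fd, 16) < 0) {
        close(fd);
        return -1;
    }
    s->fds[0] = (struct pollfd){ .fd = fd, .events = POLLIN };
    s->nfds = 1;
    return 0;
}
```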

core:container/small_array is really nice here since it gives us dynamic array semantics on a fixed-size array, allowing us to stack-allocate it.

With our basic server set up, we can now run the following code every cycle of the event loop:


In this case, we poll() all active clients and also check whether we are able to accept() on the server socket. If a new connection is waiting, we add it to the list of clients. We can then iterate over the list of clients and call read() on them. In practice, the result looks like this:

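Here is a C sketch of one event-loop cycle with a hypothetical `IpcServer` layout. It does a zero-timeout `poll()`, calls `accept()` when the listener is readable, and makes a read pass over the clients. `OnMessage` is a hypothetical hook for message handling. The back-to-front client loop plays the role of the post's `#reverse`.

```c
#include <fcntl.h>
#include <poll.h>
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

#define MAX_CLIENTS 16

typedef struct {
    struct pollfd fds[MAX_CLIENTS + 1]; /* fds[0] is the non-blocking listener */
    size_t nfds;
} IpcServer;

typedef void (*OnMessage)(int client_fd, const char *buf, size_t len, void *user);

/* One cycle: a zero timeout means poll() never blocks the event loop. */
void ipc_server_tick(IpcServer *s, OnMessage on_msg, void *user) {
    if (poll(s->fds, s->nfds, 0) <= 0) return;

    /* A connection is waiting on the listener: accept it if there is room. */
    if ((s->fds[0].revents & POLLIN) && s->nfds < MAX_CLIENTS + 1) {
        int c = accept(s->fds[0].fd, NULL, NULL);
        if (c >= 0) {
            /* accept()ed fds do not inherit O_NONBLOCK on Linux; set it. */
            int flags = fcntl(c, F_GETFL, 0);
            if (flags >= 0) fcntl(c, F_SETFL, flags | O_NONBLOCK);
            /* revents starts at 0, so the new client is read next cycle. */
            s->fds[s->nfds++] = (struct pollfd){ .fd = c, .events = POLLIN };
        }
    }

    /* Clients back to front: index nfds-1 down to 1. */
    for (size_t i = s->nfds; i-- > 1;) {
        if (!(s->fds[i].revents & POLLIN)) continue;
        char buf[512];
        ssize_t n = read(s->fds[i].fd, buf, sizeof buf);
        if (n > 0 && on_msg) on_msg(s->fds[i].fd, buf, (size_t)n, user);
    }
}
```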

#reverse is a surprise tool that will help us later.

Subscriptions

Since we now have the option to handle multiple sockets concurrently, how do we handle subscriptions? Well, we can add the following field to our context struct:


This is just a simple list that we can use to keep track of all of our volume subscribers. If we receive a Subscribe message, we just add the sender to the list of subscribers:

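Here is a C sketch of the subscriber list and the Subscribe handler. `Subscribers` and `add_subscriber` are hypothetical. Ignoring duplicate subscriptions is an extra choice made here, so a client that subscribes twice doesn't receive every update twice.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_CLIENTS 16

/* Fixed-capacity list of client fds subscribed to volume changes. */
typedef struct {
    int fds[MAX_CLIENTS];
    size_t len;
} Subscribers;

/* Record `fd` as a volume subscriber. Returns false only if the list is full. */
bool add_subscriber(Subscribers *subs, int fd) {
    for (size_t i = 0; i < subs->len; i++)
        if (subs->fds[i] == fd) return true; /* already subscribed */
    if (subs->len == MAX_CLIENTS) return false;
    subs->fds[subs->len++] = fd;
    return true;
}
```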

Now, when we update the volume, we can just send a message out to each subscriber:

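Here is a sketch of the fan-out in C, using a hypothetical `broadcast` helper. Two send flags do real work here. `MSG_NOSIGNAL` stops a write to a subscriber that has already hung up from raising `SIGPIPE` and killing the daemon. `MSG_DONTWAIT` (Linux and BSD) keeps one slow subscriber from stalling the loop.

```c
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>

typedef struct {
    int fds[16];
    size_t len;
} Subscribers;

/* Send the same encoded update to every subscriber.
   Returns how many subscribers received the whole message. */
size_t broadcast(const Subscribers *subs, const void *msg, size_t len) {
    size_t ok = 0;
    for (size_t i = 0; i < subs->len; i++) {
        ssize_t n = send(subs->fds[i], msg, len, MSG_NOSIGNAL | MSG_DONTWAIT);
        if (n == (ssize_t)len) ok++;
        /* failures are left for the disconnect handling to clean up */
    }
    return ok;
}
```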

This is all well and good, but as it stands, we still don’t know when a client has disconnected or how we should handle that. Fortunately, this is easy to detect. When poll() reports a socket as readable but reading from it returns zero bytes, the client has closed its end, so we can remove it from the connections list.


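The #reverse mentioned earlier is what lets us call unordered_remove without invalidating the iteration. unordered_remove fills the removed slot with the last element, and because we iterate backwards, that element has already been visited.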

After processing all the messages, we can also manage removing all the resources for each removed client:

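Here is a C sketch of the cleanup step; the names are hypothetical. `close()` is deferred until after the message pass on purpose. Once an fd is closed, the kernel may hand the same number to the next `accept()`, so the fd should first be removed from the subscriber list.

```c
#include <stddef.h>
#include <unistd.h>

#define MAX_CLIENTS 16

typedef struct {
    int to_remove[MAX_CLIENTS]; /* fds queued during this cycle */
    size_t nremove;
    int subscribers[MAX_CLIENTS];
    size_t nsubscribers;
} IpcCleanupState;

/* Drop each removed client from the subscriber list, close it, and reset
   the removal queue for the next cycle. */
void cleanup_removed(IpcCleanupState *s) {
    for (size_t r = 0; r < s->nremove; r++) {
        int fd = s->to_remove[r];
        for (size_t i = s->nsubscribers; i-- > 0;) {
            if (s->subscribers[i] == fd)
                s->subscribers[i] = s->subscribers[--s->nsubscribers];
        }
        close(fd);
    }
    s->nremove = 0;
}
```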

Although we could call it a day here, we might also want to do some extra work, most notably around handling sockets that were not closed gracefully. Fortunately, this is just another if:

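Here is one way to express that extra check in C, using a hypothetical helper. `poll()` always reports `POLLERR` and `POLLHUP`, and `POLLNVAL` for an fd that isn't open, even when they were not requested in `events`. Inspecting `revents` is therefore enough.

```c
#include <poll.h>
#include <stdbool.h>

/* True if poll() flagged the client as errored, hung up, or invalid. */
bool client_is_dead(const struct pollfd *p) {
    return (p->revents & (POLLERR | POLLHUP | POLLNVAL)) != 0;
}
```

Data sent just before a hang-up arrives as `POLLIN | POLLHUP`. Reading first and running this check afterwards avoids dropping a client's final message.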

Abstract Sockets

Before we finish up, there is one final concept that is extremely nice to have when using Unix domain sockets on Linux: abstract sockets. These are a non-portable, Linux-specific extension, used by making the first byte of the socket path a NUL byte. The socket then has no connection to a filesystem pathname and automatically disappears when all references to it are closed (although there does seem to be a timer on this).
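Here is a C sketch of building an abstract address, using a hypothetical `abstract_addr` helper. The name follows the leading NUL and is not NUL-terminated. Its extent comes from the address length passed to `bind()`/`connect()`, which is why the helper returns that length. Passing `sizeof(struct sockaddr_un)` would instead make the trailing zero bytes part of the name.

```c
#include <stddef.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/un.h>

/* Fill `addr` with a Linux abstract-namespace address for `name` and return
   the length to pass to bind()/connect(), or 0 if the name does not fit. */
socklen_t abstract_addr(struct sockaddr_un *addr, const char *name) {
    size_t n = strlen(name);
    if (n + 1 > sizeof addr->sun_path) return 0;
    memset(addr, 0, sizeof *addr);
    addr->sun_family = AF_UNIX;
    addr->sun_path[0] = '\0';             /* leading NUL: abstract namespace */
    memcpy(addr->sun_path + 1, name, n);  /* no terminating NUL */
    return (socklen_t)(offsetof(struct sockaddr_un, sun_path) + 1 + n);
}
```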

Putting it All Together

So what does this leave us with? We’ve effectively ended up with a single-threaded server tailored to handling a custom format that we created. Being single-threaded lets us avoid synchronization primitives, while the limited number of potential clients keeps the single thread from becoming a bottleneck.

So to summarize, on the server:

- Make a server struct to track the following:

  - Active sockets

  - Sockets to remove

  - Subscriptions

- Create a nonblocking socket

- Add the socket to the list of active sockets

- Poll the list of active sockets and process their events

- Clean up sockets that are no longer connected

on a client like a GUI:

- Open a socket

- Send a “Subscribe” message

- Receive all future events

- Send all updates to the server

and on a client like a CLI:

- Open a socket

- Send a message

- Listen for response if applicable

A more complete implementation can be found in the Mixologist repo if more examples are needed.

So, hopefully this helped you if you made it this far. Thanks for reading and have a great day!